Evolution of Hosting: Bare Metal vs. Dedicated Server vs. Cloud

We want to explore the real difference between bare metal, dedicated servers, and cloud services. But before that, let’s quickly refresh our history.

Hosting Industry Evolution

Back in 1999, when the first web hosting companies started to appear, things looked quite different from today: at best, you would get a megabyte of space and an FTP account. That was good enough for a simple HTML website with a couple of pages and moving GIFs. These days a simple “under construction” placeholder might consume as much space as the whole DOOM2 game. To run your website today, you might need a multi-tier infrastructure with web servers, load balancers, caching servers, and whatnot, all running on top of containers orchestrated by Kubernetes, with updates pushed via GitLab.

By the time the dot-com bubble burst, we already had a couple of Internet properties that later became household names: Yahoo, Amazon, Google, AOL, Netscape. It was also a time of hard realization that not all businesses can readily move online, be it for logistics or other reasons, as happened with pets.com.

One thing is for sure: even back in those days, investors realized that one of the most valuable aspects, if not the most valuable, is the number of users or the traffic that a particular Internet property brings. I am thinking about Netscape, which AOL bought when it was worth $10 billion. Who would ever think of an Internet browser valued at $10 billion? But then again, it wasn’t just a regular browser deal. Overall, the late ’90s were crazy: it was the norm to learn something new every day by failing, or to win big by unlocking something never done before.

However, nothing would be out there today without yesterday, so the Internet history of the ’90s explains a lot about today’s Internet. It also reminds me of my childhood: using IRC, browsing at 16.6 kbps, and playing a cracked version of Duke3D. Later, 56 kbps felt instantly fast, and MMX technology turned things a little bit upside down, but not much.

Let’s get back to the original topic: how the hosting industry evolved and how the large enterprises grew to own most of the Internet, such as tier 1 IP transit providers or large-scale data center networks, including the hyperscalers. The Internet infrastructure that we use today is so virtualized and hidden behind layers of abstraction that, unless you are an engineer in this field, you wouldn’t know about the issues surrounding IPv4 exhaustion, the types of hardware that serve your content, or the way packets reach those endpoints and come back to the mobile phone in your pocket. But then again, should you care?

Here’s a quick timeline of significant events leading to where we are today.

1995

- Geocities was the first web hosting service with 1 MB size of webspace. 1 MB! What could you fit in 1 MB these days?

- Angelfire, at the same time, offered 35 KB of webspace.

- Tripod was the guy with the biggest muscles and went up to 2 MB of webspace.

- Then some decent companies started to appear, and Dreamhost began operating in 1999. That was the company I followed, since I liked how they positioned their FTP agent and how they engaged with their customers. It was a different way of offering a hosting service.

The 2000s

- 2001 SWsoft’s Virtuozzo released

- 2003 BlueHost appeared in the global Internet arena and became the largest North American shared hosting provider.

- Then the individual content/blog platforms like Blogger and LiveJournal appeared, and everyone was able to host their content.

- HostGator was another big hoster who, at some point, had 1% of all the Internet traffic.

- From mid-2003, hosting control panels such as Confixx, Plesk, and others appeared, simplifying the way a non-technical person could manage a server.

- Around 2005, SoftLayer was founded; it went on to become one of the largest automated bare metal providers in the world.

2006

- 2006 onwards saw something rather important: Amazon launched AWS with a trinity of core offerings – EC2, S3, and SQS

- Ten years later this was already a $5B business and profitable

- For other big players, specifically Microsoft and Google, this was an iPhone moment: suddenly realizing that you either go all-in on cloud infrastructure or you are out.

- Still, before 2010, no one saw that AWS would become THE cloud provider. Things were much more diverse, with hundreds of providers offering both virtual and physical compute resources along with easy-to-use control panels. What no one else did, however, was aggressively develop enterprise features, such as geo-redundancy and cloud file stores, all under one unified API.

2008

- KVM (Kernel-based Virtual Machine) was merged into the Linux kernel mainline in kernel version 2.6.20, which was released on February 5, 2007.

- Microsoft Hyper-V: the finalized version was released on June 26, 2008, and delivered through Windows Update.

- There’s no hiding anymore – virtualization is here to stay, and it’s now in every Microsoft Windows Server and Linux installation.

2010 (The Hypervisor and Cloud Orchestration Year)

- That was the year when Xen (first released in 2003) and Citrix picked up, but several years later OpenStack gained traction once Rackspace started to work on it very intensively.

- Citrix donated CloudStack to the Apache Software Foundation in April 2012.

2012

- Heavy-duty data center operators like Equinix, InterXion, and Digital Realty are the most prominent players in the data center arena.

- Many smaller providers start to consider offering AWS- or Azure-connected services, because how can you compete with them?

- Interesting fact: Azure and Google Cloud gained a lot of market share from AWS, while Oracle was sleeping and did not react to the changing hosting world.

- At the same time, Rackspace, which was a massive cloud provider, got wiped out as the leading cloud provider. Rather than fighting in the mid/high-end cloud market, it shifted its effort towards supporting AWS and offering value-added integration and consultation services.

- SDN (software-defined networking) gained momentum as the foundation of new networking.

- SDS (software-defined storage) picked up as well, because local storage is not enough and the network got so fast (you can push more data over the network than a single SSD can deliver these days). Open-source projects like Ceph gained traction.

2018

- We saw a lovely performance from Packet. The team made an excellent debut structuring bare metal as a cloud offering and finally sold the entire business to Equinix, which was either thinking ahead about entering the bare metal industry or covering its current needs; a smart move both ways. By the way, Packet was incorporated in 2014, but it gained momentum very shortly after that, and 2018 became a dominating year for them.

- You can also see how software changed the way infrastructure itself is managed. What I mean by that is Kubernetes, Docker, Terraform, or LibCloud dominating today’s market for managing large-scale infrastructure.

- Many providers started to offer advanced support for the hyperscalers, as Rackspace does now.

- The market split 60%-40%: 60% belongs to the hyperscalers, and the rest to everyone else. However, the market is significant and still growing fast, which brings many opportunities for the end customer and the hosting provider.

2020 (2021 Included)

- Not to mention 5G and IoT (I probably should have included them under 2018), which are also picking up in a big way. I hope this is not going to be a bubble like VR was, where everyone thought that VR was the next big thing. But how could it be, when you need to wear those ugly and massive Oculus goggles?

- The situation remains the same as in 2018. Still, we see much more utilization of the infrastructure stacks, and everyone competes in the open-source market, which appears to be profitable if you do it right.

- Many big players (data centers, hyperscalers, and others, even the IP transit guys) started to pay attention to how small companies or even GitHub users interact and what technology stacks they use, in order to adjust their offerings.

- The market became an application-driven place where the tendency is to connect applications rather than hardware.

So where will this bring us? Will we have a couple of hyperscalers in control of Internet infrastructure, will we have everything automated? Probably not, but you have to be as inventive as ever these days to gain a spotlight.

Applications & Resource Needs (Today)

Given the intense software domination in the market, we see significant traction for many different services. Looking at the applications themselves, we can see a substantial increase in the resources that different types of applications need. Take simple WordPress hosting as an example: if you need to host a site, do you need a dedicated server, or maybe cloud? And do you want a self-managed service or a managed service, where you would not have any hassle with downtime?

Blogger / Website Owner

This is where the whole matrix of questions begins. If you are just a blogger, you will probably go with the medium.com service, where a large audience can reach your content and you do not need to blog like crazy, targeting specific keywords to gain better rankings in Google. However, if you are a company and you want to host your website with a blog inside it, then there is a chance that you would go with a headless CMS (which is, by the way, a new term that even Wikipedia has hardly picked up) that includes a blog feature. What I mean by that is that you do not need a server or cloud server for such needs: the wheel has already been invented for these requirements, and you do not need to go through the pain of installing or configuring a physical or virtual machine to get things moving. One of our clients, PressLabs, offers enterprise-grade WordPress hosting, so bloggers or site owners do not need all the hassle of maintaining the actual server configuration.

Hosting Provider

If you are a hosting provider, you go with Terraform, LibCloud, or WHMCS (where the modules are available) and integrate third parties, unless you want to do it the hardcore way and try to manage everything yourself, which makes no real sense in today’s market.

Infrastructure Provider

Then we can think about the infrastructure. In a way, this is the hardest part of the whole chain, for a couple of reasons:

- I want to express my immense appreciation to all the Heficed team giving their best to provide an excellent service to our valuable customers (it should be #1).

- You have the most significant competition.

- You have to be in line with today’s technologies to adapt your infrastructure to many environments that are available in the market.

- You have to have an API available with an SDK and many other crazy things to stay aligned. See, software-focused infrastructure is the key, and only with it will you win (the small wins will convert into the big ones).

- Many bare metal options should be available for different use cases, including GPUs.

Different cases call for different options. If you decide to go with your own infrastructure stack, keep in mind how much DevOps time you will need to maintain the real environment. In most cases, it is better to consider infrastructure that is available on demand, which means easily scalable resources should be the key, depending (of course) on how complex your solution is. Many of our customers say (and this is true) that Heficed is more stable with cloud services than Google Cloud. Still, digging deeper into Google Cloud’s service ideology, their aim is not uptime but the flexibility of the application. This is the perfect upsell for more infrastructure, and it means you should code your apps so that if the primary server crashes, the secondary boots up and resumes the application. In other words, this is called redundancy (sort of).

So, you should analyze and plan your application’s utilization very carefully, so that you spend only a small amount of time managing the actual infrastructure and most of it on the application.
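The primary/secondary idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the server names and the `is_healthy` callback are hypothetical placeholders, and a real setup would add timeouts, retries, and state replication.

```python
from typing import Callable, Sequence


def pick_active(servers: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first server, in priority order, that passes its health check.

    `servers` is ordered primary first; `is_healthy` is a hypothetical
    callback supplied by the caller (for example, an HTTP or TCP probe).
    """
    for server in servers:
        try:
            if is_healthy(server):
                return server
        except OSError:
            # A failed connection attempt counts as "unhealthy"; try the next one.
            continue
    raise RuntimeError("no healthy server available")


if __name__ == "__main__":
    # Simulate a crashed primary: only the secondary answers the probe.
    up = {"primary": False, "secondary": True}
    print(pick_active(["primary", "secondary"], lambda name: up[name]))
```

The application always asks for the active server instead of hard-coding the primary, so a crash of the first node only changes the answer, not the calling code.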

Automation in a Nutshell

The next big thing is automation. Having a server with just root access is not enough. You will run into problems when you need a specific boot configuration or some advanced feature enabled in the server BIOS. By the way, sometimes a simple BIOS update works wonders.

Today’s infrastructure provider should have decent automation available with secure IPMI (which would have a local address like 10.xx.xx.xx). Otherwise, you will end up with an insecure server environment, and trust me, hackers with their port and IP scanners do not sleep. The Internet is full of them.
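As a rough illustration of the private-addressing point, the sketch below flags IPMI interfaces that sit outside the RFC 1918 private ranges. The host names and addresses are made up for the example, and a private address alone does not make IPMI secure; it only keeps the interface off the public Internet.

```python
import ipaddress
from typing import Dict, List

# RFC 1918 private IPv4 ranges, which include the 10.x.x.x block mentioned above.
PRIVATE_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_private_ipmi(address: str) -> bool:
    """True if the address falls inside one of the RFC 1918 ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in PRIVATE_NETWORKS)


def exposed_ipmi(hosts: Dict[str, str]) -> List[str]:
    """Return the (sorted) names of hosts whose IPMI address is not private."""
    return sorted(name for name, addr in hosts.items() if not is_private_ipmi(addr))


if __name__ == "__main__":
    # Hypothetical inventory: host name -> IPMI address.
    inventory = {
        "node-a": "10.0.12.5",
        "node-b": "203.0.113.7",
        "node-c": "192.168.1.9",
    }
    print(exposed_ipmi(inventory))
```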

There are high-end enterprise solutions, or you can choose an efficient provisioning service that does exactly the same thing, whether it was built in-house or based on open source.

The most important thing is to know what you need, but beyond that, automation always comes in handy to minimize server management time. However, there is not much of a standard in this area. I would say cloud infrastructure has the most established practices, with the majority of the market using OpenStack, CloudStack, or VMware. Bare metal, by comparison, is still at the stage where many infrastructure providers try to develop their own bare-metal provisioning applications. That is how cloud became tied to the biggest players in orchestration.

True Comparison

Physical Servers / Dedicated Servers

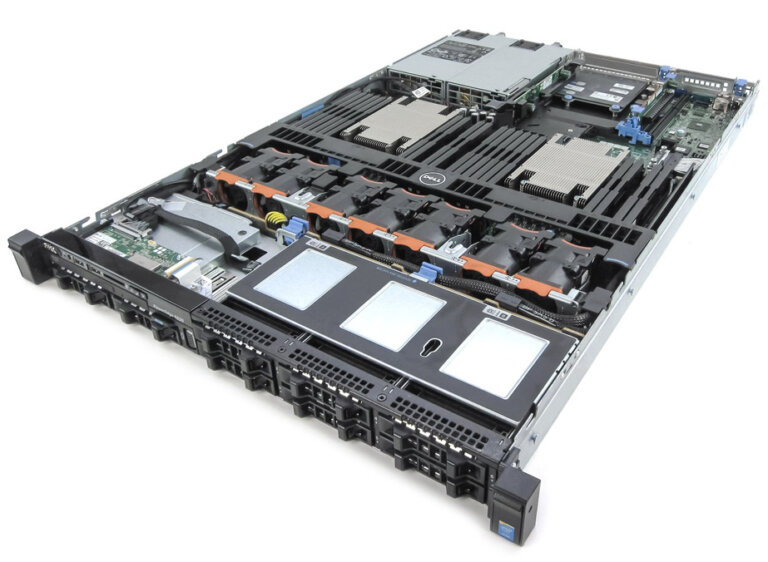

How does bare metal compare to a dedicated server and cloud? Let’s look at the hardware first. Physical servers most commonly come in 1U and 2U sizes (a typical rack has 42U of space). The image here shows a 2U server; its downside is that you can’t physically split it into smaller physical machines. These servers are used for a single application or as hypervisors in a cloud infrastructure. They are also popular when higher specifications are needed. These days you can fit up to 1.5 TB (in some cases even more) of DDR4 RAM and use either Intel or AMD CPUs, plus GPUs.

Multi-Modular Systems / Bare Metal

Then you have the commonly used 4U server chassis, which is widely used for bare metal infrastructure. It’s a popular solution because you can fit from 8 to 24 server modules and get much better power management, on top of the smaller, more efficient chassis. Each server module can hold from 128 GB to 196 GB of RAM, and the most common CPUs for such modules come from Intel’s E3, E5, and other Xeon families.

So, although seemingly the same, the differences between dedicated and bare metal servers are significant.
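To put the density difference into numbers, here is a small back-of-the-envelope calculation using the figures above (a 42U rack, 1U and 2U servers, and a 4U chassis holding 8 to 24 modules). It assumes the whole rack is filled with servers, which ignores the space that switches, PDUs, and cabling take in practice.

```python
def servers_per_rack(rack_units: int, chassis_units: int, servers_per_chassis: int = 1) -> int:
    """Number of physical servers that fit in a rack of `rack_units` U.

    Only whole chassis are counted; leftover rack units stay empty.
    """
    if chassis_units <= 0 or servers_per_chassis <= 0:
        raise ValueError("chassis size and server count must be positive")
    return (rack_units // chassis_units) * servers_per_chassis


if __name__ == "__main__":
    rack = 42
    print("1U dedicated servers:", servers_per_rack(rack, 1))        # 42
    print("2U dedicated servers:", servers_per_rack(rack, 2))        # 21
    print("4U chassis, 8 modules:", servers_per_rack(rack, 4, 8))    # 80
    print("4U chassis, 24 modules:", servers_per_rack(rack, 4, 24))  # 240
```

Under these assumptions, a rack of multi-module chassis holds roughly two to ten times as many individual servers as a rack of classic 1U or 2U machines.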

Cloud Infrastructure

In regards to the cloud infrastructure, it’s much more diverse.

For hyperscalers, it’s no longer feasible to scale on off-the-shelf hardware. When you are in the realm of tens of thousands of servers, every expense (be it a nice badge on a server, or an extra unused port) matters, so servers are tailor-built for specific tasks. They are made for no-fuss maintenance and maximum efficiency. The Open Compute Project, with participants ranging from Facebook Inc. to NVIDIA, shares its efficient network and compute gear designs with the industry. Microsoft is known to integrate FPGA accelerator boards in their machines for Machine Learning acceleration. At the same time, Amazon acquired Annapurna Labs and built custom accelerator boards to offload EC2 networking, storage, security, and hypervisor functions from the CPU.

For a local boutique cloud provider, such development is out of reach due to prohibitive R&D costs; however, not all is lost. Firstly, as mentioned, we all have access to the open-source KVM hypervisor, which is at the core of every Linux installation these days. That allows us to create the primary hypervisor-based virtual machine, the building block of a “cloud” infrastructure. Then we have software-defined storage, such as Ceph or OpenIO. Open vSwitch can do the packet switching, while Quagga or VyOS do the routing. The list goes on, and it won’t be surprising that some parts of this available software stack are used to some degree by hyperscalers themselves.

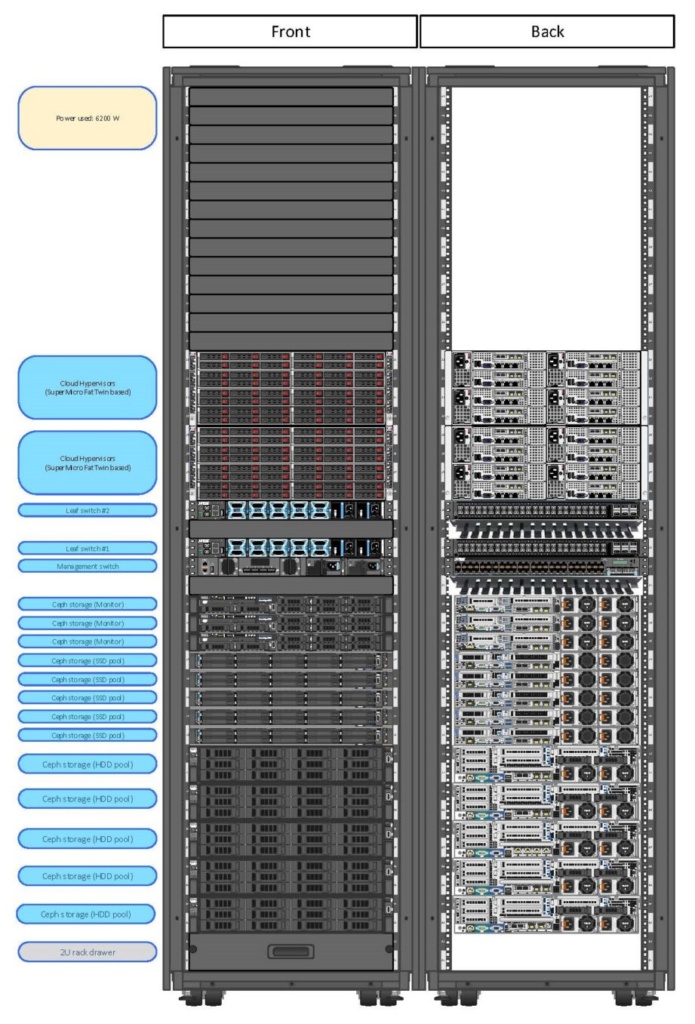

As for the hardware, options are mostly off-the-shelf, as discussed above, but nevertheless field-proven by many years in operation. An example of our cloud setup from 2015, shown in the accompanying image, has the hypervisors, storage servers, and a couple of switches. Hypervisors run KVM and are network-booted. On the storage side, we have a Ceph cluster that is connected to the hypervisors over the network. The current architecture is slightly different, but the idea stays the same: hypervisors run end-user virtual machines, while the storage cluster provides adequate space and IOPS to serve them and allows for seamless migration between the physical nodes. Hardware is sourced from SuperMicro/Dell, while networking gear is Juniper/Arista.

That is the main difference between bare metal, dedicated servers, and cloud. In other words, a bare-metal system or dedicated server stack is much less complicated to maintain. At the same time, cloud infrastructure has a much larger capacity requirement and a much more complicated setup.

Summary

At the end of the day, it doesn’t matter which solution you use; what matters most is choosing the right provider for your business, one who understands your project’s needs and can deliver a solution. I will answer the question of which provider to use in my next blog post, where I would like to take a closer look at infrastructure versus service.